I already wrote an article about open models on this blog almost two years ago. Even back then, a clear trend was emerging: models were rapidly improving relative to their size and were slowly overtaking the best model of the time, GPT-3.5. Today, that is a thing of the past. Open models have become commonplace and have incorporated additional capabilities such as reasoning and multimodality. Nevertheless, cloud models still dominate.

However, almost unnoticed, we are slowly crossing another threshold. This time it has more to do with deployment options than with capabilities: models are becoming so compact that a single user no longer always needs a GPU. How is this possible?

The Computational Challenge

First, I need to explain a few basics. Language models are among the most computationally intensive systems around, and I would like to draw attention to two fundamental bottlenecks: compute and memory.

On the one hand, generating a single token requires a large number of matrix multiplications, ideally performed in parallel. While modern CPUs with vector extensions such as AVX-512 are not completely hopeless, they still fall far short of modern GPUs. On the other hand, in a standard (dense) language model, all parameters, which amount to several gigabytes, must be read from memory for every token, and this has to happen several times per second to provide a reasonably fluid experience. Memory bandwidth is therefore just as important as raw compute.
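To make the bandwidth argument concrete, here is a back-of-the-envelope sketch. It assumes decoding is purely memory-bound, i.e. every weight is read once per generated token, and it ignores the KV cache, activations and compute limits, so it only yields an upper bound. The function name `max_tokens_per_second` and the ~90 GB/s figure (roughly the theoretical peak of dual-channel DDR5-5600) are assumptions for illustration.

```python
def max_tokens_per_second(params_billion: float, bits_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Bandwidth-bound ceiling on decoding speed for a dense model.

    Assumes every weight is read from memory once per generated token and
    ignores the KV cache, activations and compute limits.
    """
    bytes_per_token = params_billion * 1e9 * bits_per_param / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


# Assumed bandwidth: ~90 GB/s, about the peak of dual-channel DDR5-5600.
DDR5_BANDWIDTH = 90.0
for params, bits in [(7, 16), (7, 4), (3, 4), (1, 4)]:
    tps = max_tokens_per_second(params, bits, DDR5_BANDWIDTH)
    print(f"{params}B @ {bits}-bit: <= {tps:.1f} tokens/s")
```

Under these assumptions, a 7B model in 16-bit precision tops out at about 6.4 tokens/s, the same model at 4 bits at about 25.7, and a 4-bit 1B model at 180; real-world numbers will be noticeably lower.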

Ideally, we would pair our processing unit with the fastest memory available, but at useful capacities that quickly becomes too expensive. This is where GPUs have an advantage: their HBM3 or GDDR7 memory is usually far faster than ordinary DDR4/DDR5 RAM. However, that speed is only available for a limited amount of memory. CPUs can usually access more RAM at a lower price (although perhaps not in the current market), but that RAM is slower, which results in lower token generation speeds. Overall, one thing is certain: if you want to run LLMs on weak hardware, you need fewer parameters.
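The gap between CPU and GPU memory follows from a simple formula: theoretical peak bandwidth is the transfer rate times the bus width in bytes times the number of channels. The sketch below assumes a typical desktop setup (dual-channel DDR5-5600) and a high-end GDDR7 configuration (28 Gbps per pin on a 512-bit bus); both configurations and the function name `peak_bandwidth_gb_s` are illustrative assumptions, and sustained bandwidth is always below the theoretical peak.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int,
                        channels: int = 1) -> float:
    """Theoretical peak bandwidth in decimal GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


# Assumed CPU setup: dual-channel DDR5-5600, 64 bits per channel.
cpu = peak_bandwidth_gb_s(5600, 64, channels=2)
# Assumed GPU setup: GDDR7 at 28,000 MT/s (28 Gbps per pin) on a 512-bit bus.
gpu = peak_bandwidth_gb_s(28_000, 512)

print(f"CPU: {cpu:.1f} GB/s, GPU: {gpu:.1f} GB/s, ratio: {gpu / cpu:.0f}x")
```

This prints 89.6 GB/s versus 1792.0 GB/s, a factor of about 20, which for memory-bound decoding translates almost directly into token generation speed.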

The problem is that CPU bottlenecks allow only a limited amount of computation per token, which makes larger parameter counts virtually unusable. If you want to work with CPUs, the sweet spot usually lies between 1B and 3B parameters. Today, this still means a compromise. Even if a modern 1B model like LFM2.5 can approach Mistral 7B, 1B to 3B parameters are usually not enough.

Breaking the barrier

But we’re not giving up yet! There is one more trick: the Mixture of Experts (MoE) architecture, in which not all parameters are used for every token, reduces both compute and memory bandwidth requirements. It splits parts of the model, typically the feed-forward layers, into several smaller sub-networks known as ‘experts’, each with far fewer parameters. For each token, a router consults only a small subset of these experts instead of using all the parameters. Although this makes training more complex, it can deliver large speed-ups without noticeably reducing quality. This is a key reason why language models with hundreds of billions of parameters can be used in practice.
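The routing idea can be sketched in a few lines of plain Python. This is a toy, not how any particular model implements it: the class name `TinyMoE`, the random weights and the single-layer experts with ReLU are assumptions for illustration (real experts are full feed-forward blocks, and routers differ in detail). It does show the key property: the router scores all experts, but only the `top_k` best-scoring ones are actually evaluated, and their outputs are mixed with softmax weights.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def random_matrix(rng, rows, cols, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class TinyMoE:
    """Toy MoE layer: a router picks top_k of num_experts for each input."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.router = random_matrix(rng, num_experts, dim)
        # Each toy expert is one dim x dim linear layer followed by ReLU.
        self.experts = [random_matrix(rng, dim, dim) for _ in range(num_experts)]
        self.top_k = top_k

    def forward(self, x):
        scores = matvec(self.router, x)  # one score per expert
        chosen = sorted(range(len(scores)), key=scores.__getitem__,
                        reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])  # weights over chosen experts only
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            # Only the chosen experts' weights are touched for this input.
            y = [max(0.0, v) for v in matvec(self.experts[i], x)]
            out = [o + gate * v for o, v in zip(out, y)]
        return out, chosen


moe = TinyMoE(dim=8, num_experts=8, top_k=2)
output, used = moe.forward([1.0] * 8)
print("experts used:", used)
```

With 8 experts and `top_k=2`, only a quarter of the expert weights are read for this input, which is exactly where the savings in compute and memory bandwidth come from.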

If the total parameter count is chosen so that the active parameters per token stay low enough to run on moderate hardware, many more possibilities open up. For the purposes of this article, let’s call models with fewer than 4B active parameters ‘consumer MoEs’. This category has developed immensely over the last six months, with notable examples including GPT-OSS 20B, Qwen3 30B-A3B and GLM4.7-Flash. With GPT-OSS 20B and its reasoning capabilities, we can almost match the performance of the free version of ChatGPT.
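Put together, total parameters determine how much RAM a model needs, while active parameters determine how much data is read per token. The sketch below applies this to two of the models above. The parameter counts (about 21B total / 3.6B active for GPT-OSS 20B and 30.5B / 3.3B for Qwen3 30B-A3B) are approximate figures from the public model cards; the 4.5 bits per weight (4-bit quantisation plus scale overhead) and the 90 GB/s bandwidth are assumptions, and the names `MoEModel` and `estimate_moe` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MoEModel:
    name: str
    total_params_b: float   # all experts; determines RAM footprint
    active_params_b: float  # parameters read per token; determines speed


def estimate_moe(model: MoEModel, bits_per_param: float, bandwidth_gb_s: float):
    """Return (RAM in GB, bandwidth-bound tokens/s ceiling, is consumer MoE)."""
    bytes_per_param = bits_per_param / 8
    ram_gb = model.total_params_b * bytes_per_param
    tokens_per_s = bandwidth_gb_s / (model.active_params_b * bytes_per_param)
    is_consumer_moe = model.active_params_b < 4.0  # this article's definition
    return ram_gb, tokens_per_s, is_consumer_moe


models = [
    MoEModel("GPT-OSS 20B", 21.0, 3.6),      # approximate, from model card
    MoEModel("Qwen3 30B-A3B", 30.5, 3.3),    # approximate, from model card
    MoEModel("dense 7B (reference)", 7.0, 7.0),
]
for m in models:
    ram, tps, consumer = estimate_moe(m, bits_per_param=4.5, bandwidth_gb_s=90.0)
    print(f"{m.name}: ~{ram:.1f} GB RAM, <= {tps:.0f} tokens/s, consumer MoE: {consumer}")
```

Under these assumptions, both MoEs need more RAM than a dense 7B model (roughly 12 and 17 GB versus 4 GB) but have about twice its speed ceiling (around 44 and 48 versus 23 tokens/s), which is why they suit machines with plenty of comparatively cheap, slow RAM.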

Of course, I cannot deny that GPUs still have advantages: anything that runs smoothly on a CPU runs even better on a GPU. I have also not considered context size or hallucination rate here, and quantisation is required in any case. At these speeds and knowledge levels, applications such as agents are usually ruled out, and the speed still lags behind what we are used to from everyday tools. Nevertheless, being able to simply order a Hetzner server and run a reasonably powerful language model on it is still incredibly impressive.
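Since quantisation is unavoidable here, a minimal sketch of the basic idea may help: weights are split into small blocks, and each block is stored as 4-bit integers plus one scale factor. This is a simplified symmetric absmax scheme; the block size of 32, the 16-bit scale in the size calculation and the function names are assumptions, and while it is similar in spirit to the block formats used by tools such as llama.cpp, it is not compatible with any real file format.

```python
def quantize_block(block):
    """Symmetric absmax quantisation of one block to integers in [-7, 7]."""
    scale = max(abs(v) for v in block) / 7 or 1.0  # all-zero block: any scale works
    q = [max(-7, min(7, round(v / scale))) for v in block]
    return scale, q


def dequantize_block(scale, q):
    return [scale * v for v in q]


def quantize(weights, block_size=32):
    return [quantize_block(weights[i:i + block_size])
            for i in range(0, len(weights), block_size)]


weights = [0.12, -0.5, 0.33, 0.01] * 16  # 64 made-up example weights
blocks = quantize(weights)
restored = [v for scale, q in blocks for v in dequantize_block(scale, q)]
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# If each scale were stored as a 16-bit float: (32 x 4 bits + 16 bits) / 32 weights.
bits_per_weight = (32 * 4 + 16) / 32
print(f"max error: {max_error:.4f}, bits per weight: {bits_per_weight}")
```

The reconstruction error per weight is at most half a scale step; in exchange, the weights take up roughly 3.5 times less space than in 16-bit precision.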

Hybrid architectures

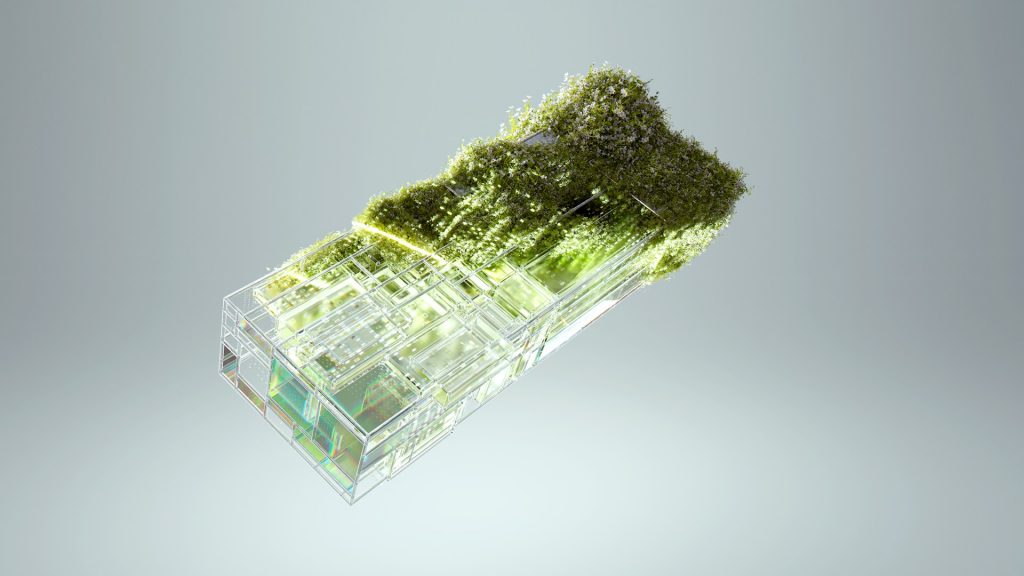

Let’s be realistic. The hardware requirements of language models alone are already causing problems for the system architecture of many medium-sized companies, at least here in Germany. Cloud-only products often make the architecture more complicated when the rest of the system is not cloud-based. At the same time, integrating such systems often reveals that the underlying knowledge management is outdated and needs to be rebuilt and modernised, which means more work and cost than anticipated. Add to this the growing need for technological redundancy in times of global uncertainty, and sometimes the only hardware budget such companies have is for older graphics cards like an RTX 3060. Using existing hardware and system architecture more efficiently therefore contributes significantly to the sustainability of these kinds of projects.

This redundancy is actually very useful. Of course, the cloud would be cheaper in this case. But for many people, computers are still something local, and not just conceptually. Not being entirely dependent on a single provider also helps: even if the entire AI cloud were to disappear tomorrow, we would at least be able to keep what we have so far. And I am not sure whether that would be enough for many people.
